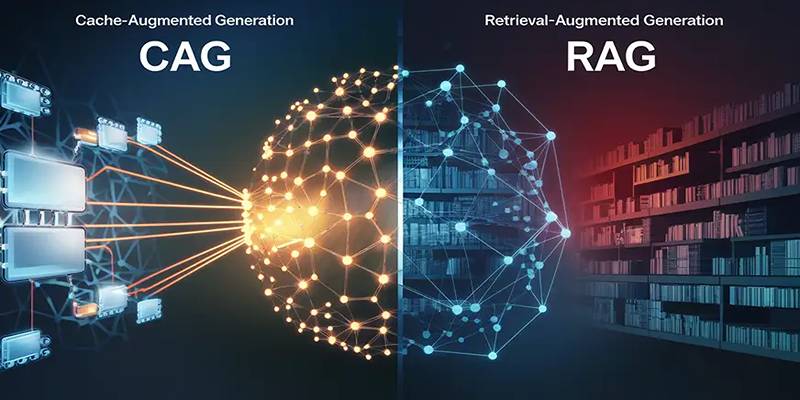

Language models have become a big part of how we use AI in everyday life, powering everything from chatbots to content writing tools. Two popular approaches for supplying these models with extra knowledge are Cache-Augmented Generation (CAG) and Retrieval-Augmented Generation (RAG). RAG is already widely used, while CAG is a newer method that may perform better in specific use cases. But what’s the difference between them, and which one works better? Let’s break it down in simple terms.

Understanding Retrieval-Augmented Generation (RAG)

RAG, or Retrieval-Augmented Generation, improves the output of large language models by letting them pull in external texts while they generate a response. This retrieval step helps the model produce answers that are more accurate and context-aware.

How RAG Works

RAG is made up of two parts: a retriever and a generator. When the model receives a prompt, the retriever first searches an indexed knowledge base for relevant documents. The retrieved material is then passed to the generator, which produces a response grounded in that data. This structure lets the model go beyond its training data and draw on up-to-date or highly specific information, which makes RAG particularly useful for answering fact-based questions or providing citations.
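The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the retriever here is a toy bag-of-words cosine-similarity ranker over an in-memory list (real systems typically use an embedding model and a vector index), and `build_prompt` only assembles the augmented prompt that would be handed to a generator; no language model is actually called. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retriever step: rank documents by similarity to the query, keep the top k."""
    q = Counter(tokenize(query))
    scored = [(cosine_similarity(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Generator input: retrieved passages are placed ahead of the question.
    In a real system this string would be sent to a language model (not shown)."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"


if __name__ == "__main__":
    knowledge_base = [
        "Orders ship within two business days.",
        "Returns are accepted within 30 days of delivery.",
        "Gift cards cannot be redeemed for cash.",
    ]
    question = "How many days do I have for returns?"
    passages = retrieve(question, knowledge_base, k=1)
    print(build_prompt(question, passages))
```

Swapping the toy ranker for an embedding-based search changes only `retrieve`; the shape of the pipeline (retrieve, then condition generation on what was found) stays the same.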

What is Cache-Augmented Generation (CAG)?

Cache-augmented generation takes a different approach. Rather than searching for external documents every time, it enhances the model with a cache—a memory-like component that stores useful past prompts and their corresponding high-quality responses.

When the model receives a new input, it first checks the cache to see if a similar prompt has been answered before. If a relevant match is found, the stored response can either be used directly or influence the new output, saving processing time and improving consistency.
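The cache lookup described above can be sketched as follows. This is a minimal illustration with several assumptions: prompts are normalized by lowercasing and collapsing whitespace, Python's standard-library `difflib.SequenceMatcher` stands in for the semantic (embedding-based) similarity a real system would more likely use, and the `0.85` threshold is an arbitrary illustrative value. The `ResponseCache` class is hypothetical; on a miss it returns `None`, leaving the caller to generate a fresh answer.

```python
from difflib import SequenceMatcher
from typing import Optional


def normalize(prompt: str) -> str:
    """Lowercase and collapse whitespace so trivial variations still match."""
    return " ".join(prompt.lower().split())


class ResponseCache:
    """Stores prompt -> validated response pairs and returns a stored response
    when a new prompt is similar enough to a cached one."""

    def __init__(self, threshold: float = 0.85):
        # Illustrative value; in practice it would be tuned on real traffic.
        self.threshold = threshold
        self.entries: dict[str, str] = {}

    def add(self, prompt: str, response: str) -> None:
        self.entries[normalize(prompt)] = response

    def lookup(self, prompt: str) -> Optional[str]:
        """Return the response for the most similar cached prompt, or None on a miss."""
        query = normalize(prompt)
        best_score, best_response = 0.0, None
        for cached_prompt, response in self.entries.items():
            score = SequenceMatcher(None, query, cached_prompt).ratio()
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


if __name__ == "__main__":
    cache = ResponseCache()
    cache.add("What is your return policy?", "You can return items within 30 days.")
    print(cache.lookup("what is your  return policy"))  # near-duplicate: cache hit
    print(cache.lookup("Do you ship to Canada?"))       # unrelated: cache miss (None)
```

The linear scan keeps the example short; a large cache would normally use an approximate nearest-neighbor index so that lookups stay fast as entries accumulate.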

Key Differences Between CAG and RAG

Although both techniques aim to enhance the capabilities of AI models, they operate on fundamentally different principles. RAG relies on external retrieval, while CAG leans on internal memory. This difference impacts their speed, accuracy, and ideal use cases.

Main Contrasts

- Data Source:
  - RAG pulls data from external databases or document sets.
  - CAG uses an internal cache built up during previous interactions.

- Response Time:
  - RAG adds latency, because each query triggers a document retrieval step.
  - CAG provides faster responses by avoiding external lookups.

- Adaptability:
  - RAG is better for topics that evolve or require real-time data.
  - CAG is more efficient in stable domains with frequent question overlap.

- Infrastructure Needs:
  - RAG needs a document index and a retriever model.
  - CAG requires cache storage and similarity-matching mechanisms.

Benefits of Cache-Augmented Generation

For use cases involving repetitive questions or stable domains, CAG offers multiple benefits.

- Reduced Latency: When a query hits the cache, CAG can return a stored response almost instantly, with no need to search for new data.

- Improved Efficiency: Since the system doesn’t require a retriever model or document index, resource usage is lower.

- Higher Consistency: Previously validated answers can be reused, improving reliability in customer service or internal tools.

- Simplicity in Setup: CAG systems can be simpler to implement in settings where a robust retriever setup is not feasible.

These advantages make CAG well suited to industries like technical support, internal enterprise applications, and educational tutoring systems.

Strengths of Retrieval-Augmented Generation

RAG remains a top choice in many scenarios, particularly where fresh, varied, or complex information is needed.

- Access to External Knowledge: The model can pull data from millions of documents, making it excellent for knowledge-heavy queries.

- Better Context Handling: RAG can provide more nuanced answers by offering rich context from various sources.

- Real-Time Relevance: In fast-changing environments like finance or health, RAG helps deliver up-to-date insights.

For example, a legal assistant tool using RAG can pull from the latest case law or regulations without needing to retrain the model.

Comparing Real-World Use Cases

To understand the strengths of each approach, it helps to consider some practical applications.

E-commerce Customer Support

In online retail, customers often ask the same set of questions about shipping, returns, and product details.

- CAG excels here, as it can store the best response for each frequently asked question and serve it instantly.

Scientific Research Assistant

A tool designed to support researchers in medicine or physics needs to access the most recent papers.

- RAG is superior in this context, retrieving current peer-reviewed content and adapting to the latest findings.

Programming Helpdesk

AI assistants that answer developers' coding questions see many repeated queries.

- CAG performs well in developer tools, where solutions are often reused and coding styles remain consistent.

Limitations and Challenges of CAG

Despite its strengths, Cache-Augmented Generation is not without its drawbacks.

- Limited by Cache Size: As the cache grows, it takes up more storage and lookups slow down, so entries need regular pruning.

- Cold Start Problem: For entirely new topics, the cache offers no help, and the model must fall back on its base capabilities.

Managing these issues requires smart cache updating policies and possibly a hybrid approach with retrieval support.
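One widely used cache updating policy is least-recently-used (LRU) eviction, which addresses the cache-size problem by discarding whichever entry has gone unused the longest once a fixed capacity is exceeded. The sketch below pairs it with a fallback for cold starts. It is an illustrative choice, not a policy the approaches above prescribe: keys are exact matches after simple normalization, and `fallback` is a hypothetical stand-in for calling the base model when the cache has nothing to offer.

```python
from collections import OrderedDict
from typing import Callable


class LRUResponseCache:
    """A bounded prompt -> response cache that evicts the least recently used entry."""

    def __init__(self, capacity: int, fallback: Callable[[str], str]):
        self.capacity = capacity
        self.fallback = fallback  # hypothetical base-model call, used on every miss
        self.entries: OrderedDict[str, str] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def answer(self, prompt: str) -> str:
        key = " ".join(prompt.lower().split())
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        # Cold start: the cache offers no help, so fall back to the base model.
        self.misses += 1
        response = self.fallback(prompt)
        self.entries[key] = response
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return response


if __name__ == "__main__":
    cache = LRUResponseCache(capacity=2, fallback=lambda p: f"generated answer to: {p}")
    cache.answer("Where is my order?")
    cache.answer("How do I reset my password?")
    cache.answer("Where is my order?")       # hit: becomes the most recent entry
    cache.answer("Do you offer gift wrap?")  # over capacity: evicts the password entry
    print(list(cache.entries), cache.hits, cache.misses)
```

Tracking hits and misses, as the counters above do, is one simple way to judge whether the capacity is large enough for the traffic a system actually sees.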

Can CAG and RAG Work Together?

Yes. A hybrid approach can blend both methods: some systems use CAG for high-frequency questions and fall back to RAG for rare or novel queries.

This combined model delivers:

- Speed from CAG for known inputs

- Freshness from RAG for emerging topics

- Better user experience through lower wait times and improved relevance

Such integration is especially beneficial in large-scale applications where users expect both speed and accuracy.
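The hybrid routing described in this section can be sketched as a cache check followed by a RAG fallback. This is a minimal illustration under several assumptions: cache keys are exact matches after normalization, the retriever is a toy word-overlap ranker, and `generate` is a hypothetical placeholder for a language-model call. The `HybridAssistant` class and its method names are likewise made up for illustration.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased set of alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str]) -> str:
    """Toy retriever: return the document sharing the most words with the query."""
    return max(documents, key=lambda doc: len(tokens(query) & tokens(doc)))


def generate(query: str, context: str) -> str:
    """Hypothetical placeholder for a language-model call conditioned on context."""
    return f"Based on: {context}"


class HybridAssistant:
    def __init__(self, documents: list[str]):
        self.documents = documents
        self.cache: dict[str, str] = {}

    def answer(self, query: str) -> tuple[str, str]:
        """Return (answer, route), where route is 'cache' or 'rag'."""
        key = " ".join(query.lower().split())
        if key in self.cache:  # CAG path: a known input is served from memory
            return self.cache[key], "cache"
        context = retrieve(query, self.documents)  # RAG path: a novel input
        response = generate(query, context)
        self.cache[key] = response  # store it so the next repeat is fast
        return response, "rag"


if __name__ == "__main__":
    docs = [
        "Standard shipping takes 3 to 5 days.",
        "Refunds are issued to the original payment method.",
    ]
    bot = HybridAssistant(docs)
    print(bot.answer("How long does shipping take?"))  # first time: routed to RAG
    print(bot.answer("how long does shipping take?"))  # repeat: served from the cache
```

Caching every RAG answer is only one design choice. A system that cares about consistency might cache only answers that have been reviewed, and one that covers fast-changing topics might expire entries after a while so that stale answers are retrieved again.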

Which One Performs Better Overall?

There is no one-size-fits-all answer. The better approach depends on the use case.

- For high-volume, low-variance environments like chat support, CAG offers better performance.

- For open-domain or research-heavy applications, RAG remains more powerful.

A strategic evaluation of task needs, infrastructure, and data freshness can help developers choose the right method.

Conclusion

Cache-Augmented Generation and Retrieval-Augmented Generation both aim to enhance how AI models deliver information, but they do so through different means. CAG provides speed and efficiency through memory reuse, while RAG improves accuracy and flexibility by drawing on external knowledge. As AI systems continue to grow in complexity, selecting the right augmentation method, or combining both, will be key to building smart, scalable, and user-friendly applications. Developers and businesses should assess their specific needs to determine whether CAG’s cache-driven approach or RAG’s retrieval-based method is the best fit.