Lemmatization is a core step in natural language processing (NLP) that reduces words to their dictionary base forms, known as lemmas, while preserving their meaning in context. Unlike stemming, which strips suffixes without regard to linguistic structure, lemmatization yields valid dictionary words. This article explains how lemmatization works, what advantages it has over alternative approaches, what makes it hard to implement, and where it is used in practice.

The Role of Lemmatization in NLP

Human language is complex: the same word appears in different forms depending on tense, number, and grammatical function. For machines to interpret text effectively, these variants need to be normalized to a common form. Lemmatization does this by reducing words to their base forms, so that an algorithm treats "running," "ran," and "runs" as the same term, "run." Used in an NLP pipeline, this step can improve model performance on tasks such as document classification, chatbots, and semantic search.

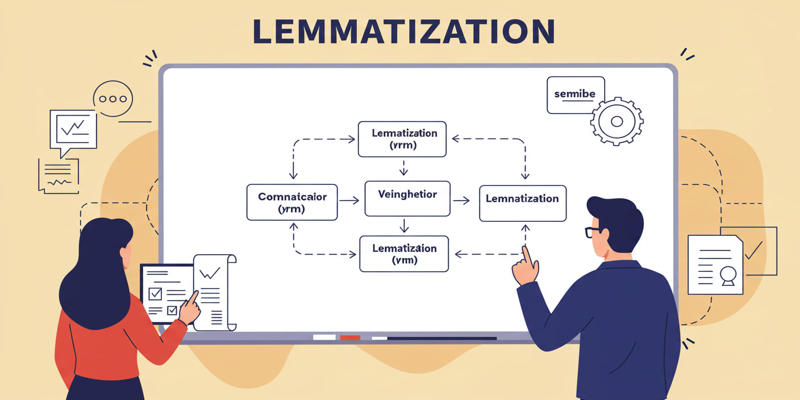

How Lemmatization Works: A Step-by-Step Process

Lemmatization goes beyond simple rule-based truncation. It combines several stages of linguistic analysis.

Morphological Analysis:

The system breaks each word into its morphological components: the root plus any prefixes and suffixes. For example, "unhappiness" splits into "un-" (prefix), "happy" (root), and "-ness" (suffix).
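
The split described above can be sketched in a few lines of Python. This is a toy illustration only: the affix lists, the root set, and the helper names `restore_root` and `split_morphemes` are assumptions made for this example, and real morphological analyzers rely on much larger lexicons and rule sets.

```python
# Toy affix inventory and root list (hypothetical; real analyzers use large lexicons).
PREFIXES = ("un", "re", "dis")
SUFFIXES = ("ness", "ly", "ing", "ed")
ROOTS = {"happy", "kind", "play"}


def restore_root(stem):
    """Return a known root for a stem, undoing the y -> i spelling change if needed."""
    if stem in ROOTS:
        return stem
    if stem.endswith("i") and stem[:-1] + "y" in ROOTS:
        return stem[:-1] + "y"
    return None


def split_morphemes(word):
    """Split a word into (prefix, root, suffix), or return None if nothing matches."""
    word = word.lower()
    for prefix in ("",) + PREFIXES:
        if not word.startswith(prefix):
            continue
        rest = word[len(prefix):]
        for suffix in ("",) + SUFFIXES:
            if suffix and not rest.endswith(suffix):
                continue
            stem = rest[:len(rest) - len(suffix)] if suffix else rest
            root = restore_root(stem)
            if root is not None:
                return (prefix, root, suffix)
    return None


print(split_morphemes("unhappiness"))  # ('un', 'happy', 'ness')
print(split_morphemes("replayed"))     # ('re', 'play', 'ed')
```

Words outside the toy lists return `None`, which is a reminder that this kind of analysis is only as good as the lexicon behind it.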

Part-of-Speech (POS) Tagging:

The system determines whether a word is acting as a noun, verb, adjective, or adverb. This matters because the lemma can depend on that role: "saw" has the lemma "see" as a verb but the lemma "saw" as a noun.
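
To see why the tag matters, consider a minimal lookup keyed on both the word and its part of speech. The mini-lexicon, the tag names, and the `lemmatize` function below are hypothetical and exist only for this sketch; they do not mirror any particular library.

```python
# Hypothetical mini-lexicon keyed by (surface form, POS tag).
LEMMAS = {
    ("saw", "VERB"): "see",
    ("saw", "NOUN"): "saw",
    ("running", "VERB"): "run",
    ("ran", "VERB"): "run",
    ("runs", "VERB"): "run",
}


def lemmatize(word, pos):
    """Return the lemma for (word, pos); fall back to the lowercased word."""
    return LEMMAS.get((word.lower(), pos), word.lower())


print(lemmatize("saw", "VERB"))  # see
print(lemmatize("saw", "NOUN"))  # saw
```

The same surface form yields two different lemmas, so a wrong POS tag leads directly to a wrong lemma.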

Contextual Understanding:

The system uses the surrounding words to resolve ambiguity. In "the bat flew through the night," "bat" is an animal; in "he swung the bat," it is a piece of sports equipment.
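
One simple way to approximate this step is to count how many context words overlap with cue words for each sense, in the spirit of the Lesk approach to word sense disambiguation. The sense labels, cue words, and the `disambiguate` helper are assumptions for illustration; production systems typically use statistical or neural taggers instead.

```python
import re

# Hypothetical cue words for each sense of an ambiguous word.
SENSES = {
    "bat": {
        "animal": {"flew", "night", "cave", "wings"},
        "sports equipment": {"swung", "ball", "hit", "pitch"},
    }
}


def disambiguate(word, sentence):
    """Pick the sense whose cue words overlap most with the sentence."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    senses = SENSES[word]
    # On a tie, max() keeps the first sense in insertion order.
    return max(senses, key=lambda sense: len(senses[sense] & context))


print(disambiguate("bat", "The bat flew out of the cave at night"))  # animal
print(disambiguate("bat", "He swung the bat and hit the ball"))      # sports equipment
```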

Dictionary Lookup:

Finally, the algorithm looks the word up in a lexical database such as WordNet to retrieve its base form (lemma).
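
A dictionary-backed lookup can be sketched as an exception list for irregular forms plus a few suffix rules whose results are accepted only if they appear in the vocabulary. The vocabulary, exception list, rules, and the `lookup_lemma` name below are toy assumptions that stand in for a real lexical database.

```python
# Toy vocabulary standing in for a lexical database (hypothetical contents).
VOCAB = {"run", "goose", "study", "jump", "happy"}
# Irregular forms that no suffix rule can recover.
EXCEPTIONS = {"ran": "run", "geese": "goose"}
# (suffix to strip, replacement), tried in order.
RULES = [("ies", "y"), ("ing", ""), ("ed", ""), ("es", ""), ("s", "")]


def lookup_lemma(word):
    """Return a lemma validated against VOCAB, or the word itself if none is found."""
    word = word.lower()
    if word in VOCAB:
        return word
    if word in EXCEPTIONS:
        return EXCEPTIONS[word]
    for suffix, replacement in RULES:
        if not word.endswith(suffix):
            continue
        candidate = word[:-len(suffix)] + replacement
        if candidate in VOCAB:
            return candidate
        # Undo a doubled final consonant, e.g. "running" -> "runn" -> "run".
        if len(candidate) >= 2 and candidate[-1] == candidate[-2] and candidate[:-1] in VOCAB:
            return candidate[:-1]
    return word


for w in ["running", "studies", "jumped", "geese", "ran"]:
    print(w, "->", lookup_lemma(w))
```

Checking every candidate against the vocabulary is what keeps the output a valid dictionary word rather than a truncated fragment.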

Lemmatization vs. Stemming: Key Differences

Both techniques aim to normalize text, but they differ in method and in the results they produce.

- Stemming strips word endings with heuristic rules, which often leaves non-words. For example, "jumping" becomes "jump," but "happily" becomes "happili," which is not a valid word.

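- Lemmatization uses context and grammatical analysis to produce valid dictionary words. For example, "geese" becomes "goose," and "happily" is either kept as a valid adverb or, in tools that also undo derivation, mapped to "happy"; it is never cut down to a non-word.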

- Stemming is faster but less accurate, so it suits speed-sensitive tasks such as keyword indexing. Lemmatization offers better semantic accuracy, so it is the better choice where precise analysis matters, as in chatbots or sentiment detection.
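
The contrast can be made concrete with a small comparison. The `crude_stem` function below is a deliberately crude stemmer written for this example so that it reproduces outputs like "happili"; it is not the Porter algorithm or any library's stemmer. The lemma table and the `lemma_lookup` name are likewise hypothetical.

```python
def crude_stem(word):
    """Apply a few blind suffix rules, with no check that the result is a word."""
    word = word.lower()
    if word.endswith("ies"):
        return word[:-3] + "i"
    if word.endswith("ing") and len(word) > 5:
        return word[:-3]
    if word.endswith("y") and len(word) > 2:
        return word[:-1] + "i"
    return word


# Hypothetical lemma table standing in for a dictionary-backed lemmatizer.
LEMMA_TABLE = {"jumping": "jump", "studies": "study", "geese": "goose"}


def lemma_lookup(word):
    """Return the dictionary form if known, else the word unchanged."""
    return LEMMA_TABLE.get(word.lower(), word.lower())


print(crude_stem("happily"))  # happili
for w in ["jumping", "studies", "geese"]:
    print(w, "| stem:", crude_stem(w), "| lemma:", lemma_lookup(w))
```

The stemmer needs no dictionary and does very little work per word, which is where its speed comes from; the price is output such as "studi" and a missed link between "geese" and "goose."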

Advantages of Lemmatization

Enhanced Semantic Accuracy:

Lemmatization lets NLP models treat "better" as a form of "good" and "worst" as a form of "bad," which improves tasks such as sentiment analysis.

Improved Search Engine Performance:

When search engines apply lemmatization, a query for "running shoes" can also retrieve relevant documents that contain "run" or "ran."
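
A minimal sketch of this idea compares the lemmas of the query with the lemmas of each document. The lemma table, the sample documents, and the `lemma_set` and `search` helpers are assumptions for illustration; a real search engine would add ranking, indexing, and a full lemmatizer.

```python
import re

# Hypothetical lemma table; a real system would call a full lemmatizer here.
LEMMAS = {"running": "run", "ran": "run", "runs": "run", "shoes": "shoe"}


def lemma_set(text):
    """Tokenize on letters and map each token to its lemma."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return {LEMMAS.get(token, token) for token in tokens}


def search(query, documents):
    """Return documents that share at least one lemma with the query."""
    query_lemmas = lemma_set(query)
    return [doc for doc in documents if query_lemmas & lemma_set(doc)]


docs = [
    "She ran a marathon last spring",
    "Best trail run of the year",
    "A recipe for lentil soup",
]
print(search("running shoes", docs))  # matches the first two documents
```

Without the lemma step, none of these documents would match, because none contains the exact strings "running" or "shoes."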

Reduced Data Noise:

Collapsing variants such as "studies," "studying," and "studied" into the single lemma "study" removes redundant forms from a dataset, which gives machine learning models a smaller, cleaner vocabulary.
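
The effect on vocabulary size is easy to show with a token count before and after normalization. The token list and lemma table are made up for this sketch.

```python
from collections import Counter

# Hypothetical lemma table and token stream.
LEMMAS = {"studies": "study", "studying": "study", "studied": "study"}
tokens = ["study", "studies", "studying", "studied", "exam", "studies"]

raw_counts = Counter(tokens)
lemma_counts = Counter(LEMMAS.get(token, token) for token in tokens)

print(len(raw_counts), "distinct forms before lemmatization")   # 5
print(len(lemma_counts), "distinct forms after lemmatization")  # 2
print(lemma_counts["study"])                                    # 5
```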

Support for Multilingual Applications:

Good lemmatizers also handle morphologically rich languages, such as Finnish and Arabic, in which a single root produces many word forms.

Challenges in Lemmatization

Computational Complexity:

Processing time increases significantly because lemmatization depends on POS tagging and dictionary lookups.

Language-Specific Limitations:

English lemmatizers are mature, but tools for low-resource languages are often inaccurate because lexical data is scarce.

Ambiguity Resolution:

Distinguishing "lead" (to guide) from "lead" (a metal) requires sophisticated context analysis, and errors can still occur occasionally.

Integration with Modern NLP Models:

Transformer-based models such as BERT use subword tokenization, which reduces the need for explicit lemmatization. Lemmatization still adds value in rule-based applications and wherever output must be easy for humans to interpret.
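
To illustrate what subword tokenization does, here is a toy greedy longest-match tokenizer in the style of WordPiece. The vocabulary is invented for this example and far smaller than a real one, so the splits shown are not what an actual BERT tokenizer would produce; `wordpiece` is a hypothetical helper name.

```python
# Toy subword vocabulary (hypothetical); "##" marks a piece that continues a word.
VOCAB = {"run", "##ning", "##s", "jump", "##ing", "##ed"}


def wordpiece(word, vocab=VOCAB):
    """Greedy longest-match-first split of a word into subword pieces."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no piece matches at this position
        pieces.append(piece)
        start = end
    return pieces


print(wordpiece("running"))  # ['run', '##ning']
print(wordpiece("runs"))     # ['run', '##s']
print(wordpiece("ran"))      # ['[UNK]']
```

Regular forms share the piece "run," so the model sees their relationship without a lemmatizer. The irregular form "ran" shares no piece with "run" in this toy vocabulary, so any link between them has to be learned from data rather than read off the tokens.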

Applications of Lemmatization Across Industries

Healthcare:

Medical systems use lemmatization to help normalize patient statements such as "My head hurts" and "I've had a headache" into consistent input for diagnostic tools.

E-Commerce:

Online search platforms normalize queries such as "wireless headphones" and "headphone wireless" to the same terms, which improves recommendations.

Legal Tech:

Lemmatizing legal language helps document analysis tools recognize related terms within contracts, such as "termination" and "terminate."

Social Media Monitoring:

Brands gauge consumer sentiment by reducing variants of a keyword ("love," "loved," "loving") to a single base form and tracking opinion trends.

Machine Translation:

In translation software, lemmatization helps align words across languages, which improves phrase-level accuracy.

Tools and Libraries for Lemmatization

NLTK (Python):

NLTK's WordNetLemmatizer treats every word as a noun unless a POS tag is supplied explicitly. Given the right tag, it maps the adjective "better" to its base form "good" and the verb "running" to its base form "run."

spaCy:

spaCy is an industrial-strength library that assigns parts of speech and lemmatizes text automatically within a single efficient processing pipeline.

Stanford CoreNLP:

This Java-based toolkit provides enterprise-grade lemmatization for both academic and commercial use, with support for multiple languages.

Gensim:

Gensim is primarily a topic modeling library, but it is often paired with spaCy or NLTK, which handle preprocessing steps such as lemmatization.

Future of Lemmatization in AI

As NLP models grow more complex, the role of lemmatization is changing. Neural networks do not handle every word variant reliably, so lemmatization remains useful in the following areas:

- Explainability: Translating model outputs into human-readable terms.

- Hybrid models: Combining rule-based lemmatization components with deep learning methods in frameworks that focus on domain-specific text understanding, in areas such as medicine and law.

- Low-resource languages: Improving lemmatizers for underrepresented languages through multilingual transformers combined with transfer learning.

Conclusion

Lemmatization helps NLP systems process natural human language more accurately and consistently. Data scientists and developers who build language models, improve search algorithms, or create natural-sounding chatbots benefit from mastering it. As AI becomes part of everyday operations, lemmatization will continue to support effective human-machine interaction.