3D modeling has long been a cornerstone of innovation across industries, from video games and animated films to product design and medical visualization. Yet the process has remained complex and time-consuming, and it has often been limited to highly skilled professionals. Tencent’s Hunyuan3D-1.0 is changing that. This AI-powered tool generates 3D assets in seconds rather than hours, combining speed with accuracy and creative freedom.

Whether you're a developer crafting immersive game worlds, a designer building prototypes, or a creator visualizing complex concepts, Hunyuan3D-1.0 delivers the tools to fast-track your creative journey without compromising quality. Let’s dive into how this technology works, what makes it revolutionary, and how it opens the door to a whole new era of 3D asset creation.

The Two-Stage Magic Behind Hunyuan3D-1.0

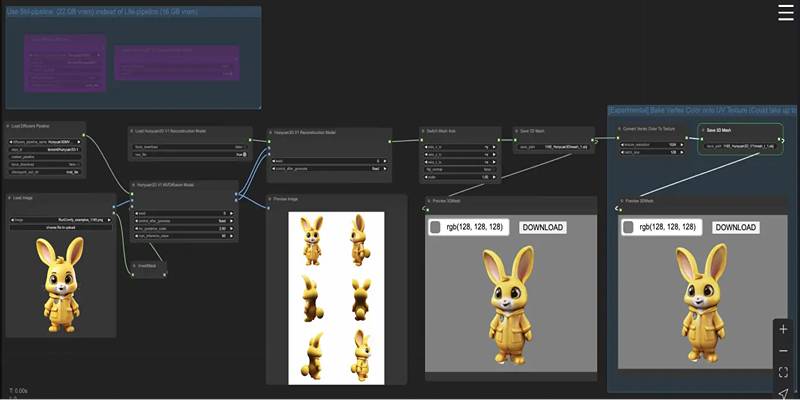

Hunyuan3D-1.0 uses a sophisticated two-stage approach to create 3D models. Let’s break down the steps involved in generating a high-quality 3D asset:

1. Multi-View Diffusion Model

The first stage of the process involves multi-view generation, where Tencent leverages the power of diffusion models to create several 2D images of an object from different angles. These images serve as the visual foundation for the 3D reconstruction.
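To make "different angles" concrete, the sketch below places a ring of virtual cameras around an object. The view count of six, the shared elevation, and the y-up axis convention are illustrative assumptions, not Hunyuan3D-1.0's documented camera layout. `orbit_camera_positions` is a hypothetical helper.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def orbit_camera_positions(n_views: int, elevation_deg: float, radius: float) -> List[Vec3]:
    """Place n_views camera positions on a circle around the origin.

    Azimuths are evenly spaced over 360 degrees, and every camera shares the
    same elevation and distance. Axes follow a y-up convention (an assumption).
    """
    if n_views <= 0:
        raise ValueError("n_views must be positive")
    elev = math.radians(elevation_deg)
    positions = []
    for i in range(n_views):
        azim = 2.0 * math.pi * i / n_views  # evenly spaced azimuth angles
        x = radius * math.cos(elev) * math.sin(azim)
        y = radius * math.sin(elev)          # height set by the elevation angle
        z = radius * math.cos(elev) * math.cos(azim)
        positions.append((x, y, z))
    return positions


if __name__ == "__main__":
    for pos in orbit_camera_positions(n_views=6, elevation_deg=0.0, radius=2.0):
        print(tuple(round(c, 3) for c in pos))
```

Each generated image would correspond to one of these viewpoints, which is what later lets the reconstruction stage treat the views as calibrated.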

Building on Zero-1-to-3++, Hunyuan3D expands to a 3× larger generation grid, producing more diverse and consistent outputs. A technique called “Reference Attention” aligns the generated images with the reference input, keeping texture and structure consistent across all views. This ensures that visual information, from shape to color, is preserved and ready for transformation into 3D form.
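Reference attention, as described for Zero-1-to-3++, also runs the network on the reference image and appends that image's attention keys and values to the self-attention of the views being generated, so every view can attend to the input. The pure-Python sketch below shows only that attention step. It assumes identity projections (no learned query, key, or value weights), uses hypothetical function names, and is not Tencent's implementation.

```python
import math
from typing import List

Matrix = List[List[float]]  # one row per token, one column per feature


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention on plain Python lists."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def reference_attention(view_tokens: Matrix, ref_tokens: Matrix) -> Matrix:
    """Self-attention for one generated view, with the reference image's tokens
    appended to the keys and values so the view can attend to the input image.

    Learned query/key/value projections are omitted (identity) in this sketch.
    """
    context = view_tokens + ref_tokens  # queries stay view-only; keys/values grow
    return attention(view_tokens, context, context)


if __name__ == "__main__":
    view = [[1.0, 0.0], [0.0, 1.0]]
    ref = [[5.0, 0.0]]
    print(reference_attention(view, ref))
```

With an empty reference this reduces to ordinary self-attention. A reference token that closely matches a query pulls that query's output toward the reference features, which is the consistency effect the paragraph above describes.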

2. Sparse-View Reconstruction Model

Once the multi-view images are generated, the second stage kicks in: sparse-view 3D reconstruction. This step uses a transformer-based architecture to fuse the various 2D images into a high-fidelity 3D model.

What makes this stage particularly impressive is its speed: the 3D reconstruction completes in less than two seconds. The system supports both calibrated images (those with known camera poses) and uncalibrated images (user-provided images without known camera parameters), giving creators flexibility in how they provide input.
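The hand-off between the two stages can be sketched as a small driver. Everything here is hypothetical: the `View` and `Mesh` types, the `image_to_3d` function, and the assumption that the user's original image is passed to the reconstructor as an uncalibrated view alongside the calibrated generated ones. Both stages are injected as callables so the control flow runs without the real models.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

Pose = Tuple[float, float, float]  # hypothetical: camera position only, for brevity


@dataclass
class View:
    image: object                # pixel data, left abstract in this sketch
    pose: Optional[Pose] = None  # None marks an uncalibrated view

    @property
    def calibrated(self) -> bool:
        return self.pose is not None


@dataclass
class Mesh:
    vertices: List[Tuple[float, float, float]] = field(default_factory=list)
    faces: List[Tuple[int, int, int]] = field(default_factory=list)


def image_to_3d(
    image: object,
    generate_views: Callable[[object], List[View]],
    reconstruct: Callable[[Sequence[View]], Mesh],
) -> Mesh:
    """Hypothetical two-stage driver: stage 1 makes posed views, stage 2 fuses them."""
    generated = generate_views(image)  # stage 1: multi-view diffusion
    if not all(v.calibrated for v in generated):
        raise ValueError("stage 1 should return views with known camera poses")
    # Assumption: the original image's pose is unknown, so it joins as uncalibrated.
    inputs = list(generated) + [View(image=image)]
    return reconstruct(inputs)  # stage 2: sparse-view reconstruction
```

In a real setup `generate_views` and `reconstruct` would wrap the two models. Passing them in as parameters also makes it easy to substitute stubs when testing the surrounding code.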

Key Features of Hunyuan3D-1.0

Tencent Hunyuan3D-1.0 stands out for its ability to rapidly convert 2D visuals into textured, high-fidelity 3D assets. Below is a detailed breakdown of its most powerful capabilities:

1. Image-to-3D Conversion

The most impressive feature of Hunyuan3D-1.0 is its ability to transform a single 2D image into a complete 3D model, something that typically requires complex workflows or multiple input views.

What it offers:

- Uses AI to infer shape, structure, and perspective from just one image

- Reconstructs hidden surfaces based on learned object patterns

- Works with a wide variety of object types, including shoes, bags, furniture, and more

- Reduces the need for manual 3D modeling or photogrammetry scans

- Ideal for rapid concept development, prototyping, and virtual asset creation

This feature lets anyone, from designers to marketers, generate professional 3D models without technical expertise in 3D software.

2. Ultra-Fast Processing Speed

Speed is where Hunyuan3D-1.0 truly shines: it delivers in seconds results that traditionally took hours.

Performance highlights:

- Generates a complete mesh and texture in 2 to 3 seconds

- Optimized for batch processing and high-volume use cases

- Enables real-time feedback loops in the creative workflow

With this level of efficiency, teams can test ideas and iterate much faster, bringing concepts to life at the pace of imagination.
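Latency figures like these depend heavily on hardware, so it is worth measuring them on your own setup. The following is a minimal, framework-agnostic timing harness. The function `time_stages` and the stub stages in the demo are hypothetical placeholders, not Hunyuan3D-1.0 APIs.

```python
import statistics
import time
from typing import Any, Callable, Dict, List, Sequence, Tuple

# A stage is a (name, function) pair; each function receives the previous stage's output.
Stage = Tuple[str, Callable[[Any], Any]]


def time_stages(stages: Sequence[Stage], inp: Any, repeats: int = 3) -> Dict[str, float]:
    """Run the whole pipeline `repeats` times and return median wall-clock seconds per stage."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    names = [name for name, _ in stages]
    if len(set(names)) != len(names):
        raise ValueError("stage names must be unique")
    samples: Dict[str, List[float]] = {name: [] for name in names}
    for _ in range(repeats):
        x = inp
        for name, fn in stages:
            start = time.perf_counter()
            x = fn(x)  # feed this stage's output into the next stage
            samples[name].append(time.perf_counter() - start)
    return {name: statistics.median(times) for name, times in samples.items()}


if __name__ == "__main__":
    # Stub stages standing in for the two models (hypothetical placeholders).
    demo = [("multi_view", lambda img: [img] * 6), ("reconstruct", lambda views: len(views))]
    print(time_stages(demo, "input.png"))
```

If the stages run on a GPU, the device work is usually asynchronous, so synchronize before reading the clock (for example with `torch.cuda.synchronize()` in PyTorch). Otherwise the per-stage numbers will undercount.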

3. High-Fidelity Mesh and Texture Generation

Quality hasn’t been sacrificed for speed. Hunyuan3D-1.0 delivers professional-grade results that are usable straight out of the model.

Mesh quality benefits:

- Produces watertight 3D meshes essential for rendering, animation, and 3D printing

- Ensures smooth surfaces, consistent topology, and accurate proportions

- Supports detailed UV unwrapping and clean geometry output
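"Watertight" has a precise, checkable meaning for triangle meshes: every edge is shared by exactly two faces, so the surface has no holes. The sketch below implements that standard edge-count test on a face list. It is a generic check you could run on any exported mesh, not part of Hunyuan3D-1.0, and `is_watertight` is a hypothetical name.

```python
from collections import Counter
from typing import Iterable, Tuple

Face = Tuple[int, int, int]  # three vertex indices per triangle


def is_watertight(faces: Iterable[Face]) -> bool:
    """True if every undirected edge is shared by exactly two triangles.

    This is the usual closed-surface edge test; it does not detect
    self-intersections or inconsistent winding.
    """
    edge_counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edge_counts[(min(u, v), max(u, v))] += 1  # store edges undirected
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())


tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_watertight(tetrahedron))      # True: closed surface
print(is_watertight(tetrahedron[:3]))  # False: removing a face leaves a hole
```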

Texture generation capabilities:

- Accurately reflects surface materials, colors, and patterns from the source image

- Maintains lighting and shading realism

- Outputs models that are ready for PBR (physically based rendering) workflows

The result is less time cleaning up models and more time putting them to use.
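One practical detail behind "ready for PBR workflows": base-color textures are conventionally stored sRGB-encoded (the glTF 2.0 specification requires this for `baseColorTexture`), while lighting is computed in linear space. The standard sRGB transfer functions are sketched below. Whether a given Hunyuan3D-1.0 export uses this encoding is an assumption to verify against its actual output.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB-encoded channel in [0, 1] to linear light (IEC 61966-2-1)."""
    if c <= 0.04045:
        return c / 12.92  # linear segment near black
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode one linear-light channel in [0, 1] back to sRGB."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


if __name__ == "__main__":
    print(round(srgb_to_linear(0.5), 3))
```

For example, a mid-grey sRGB value of 0.5 corresponds to roughly 0.214 in linear light, which is why shading an sRGB texture without decoding it first renders noticeably too bright.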

4. Support for Multiple Industry-Standard 3D Formats

Hunyuan3D-1.0 outputs models in widely supported formats, making integration into design pipelines easy and seamless.

Supported formats include:

- OBJ: Versatile format used in most 3D tools

- FBX: Preferred in gaming and animation for combined mesh and animation data

- GLB/glTF: Ideal for web and AR/VR experiences
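OBJ's wide support comes partly from its simplicity: it is plain text, with `v` lines for vertex positions and `f` lines for faces. The sketch below writes a triangle mesh in that format, assuming positions only (no normals, UVs, or materials). `to_obj` is a hypothetical helper, not part of Hunyuan3D-1.0.

```python
from typing import Iterable, Sequence, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]  # 0-based vertex indices, as usually held in memory


def to_obj(vertices: Sequence[Vertex], faces: Iterable[Face]) -> str:
    """Serialize a triangle mesh (positions only) as Wavefront OBJ text."""
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    n = len(vertices)
    for face in faces:
        if not all(0 <= i < n for i in face):
            raise ValueError(f"face {face} references a missing vertex")
        a, b, c = face
        # OBJ indices are 1-based, so shift each index by one.
        lines.append(f"f {a + 1} {b + 1} {c + 1}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(to_obj([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [(0, 1, 2)]), end="")
```

The 1-based face indices are a common source of off-by-one errors when converting meshes by hand.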

Benefits of format support:

- Direct import into Blender, Unity, Unreal Engine, Maya, and WebGL platforms

- Simplifies the transition between AI modeling and manual touch-up or animation

- Reduces file conversion needs and improves compatibility across software ecosystems

This multi-format approach ensures Hunyuan3D-1.0 fits into any creative workflow with minimal effort.

5. Custom Training for Specialized Industry Needs

Tencent designed Hunyuan3D-1.0 to be versatile, but it also supports custom fine-tuning for specific sectors and object categories.

Custom training advantages:

- Models trained on fashion datasets perform better on clothing and accessories

- Variants tailored for game assets focus on real-time performance and reduced polygon counts

- Can be trained with user-specific datasets for unique object categories or styles

This adaptability makes Hunyuan3D-1.0 scalable and useful in highly specialized use cases, from AR mirrors in fashion retail to object scanning in architecture.

Getting Started with Hunyuan3D-1.0

Getting started with Hunyuan3D-1.0 is simple, especially for developers familiar with Python and machine learning tools. The platform offers a straightforward installation process and provides pre-trained models for different use cases. Here's a basic overview of how to get started:

- Clone the repository: Download the Hunyuan3D-1.0 repository from GitHub to your local machine.

- Set up the environment: Use the provided installation script to set up a Conda environment with the necessary dependencies for running Hunyuan3D-1.0.

- Download pre-trained models: Hunyuan3D-1.0 provides pre-trained models on platforms like Hugging Face, which can be downloaded and used for various 3D asset generation tasks.

- Run the inference: Once the environment is set up, you can run the model to generate 3D models based on text or image inputs.
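Before cloning and building the environment, a quick preflight check can save a failed install. The sketch below only reports whether a recent-enough Python and the `git` and `conda` executables are on the PATH. The 3.8 minimum is a placeholder assumption, so check the repository's own requirements for the actual versions. `preflight` is a hypothetical helper.

```python
import shutil
import sys
from typing import Dict, Sequence, Tuple


def preflight(min_python: Tuple[int, int] = (3, 8),
              tools: Sequence[str] = ("git", "conda")) -> Dict[str, bool]:
    """Report whether basic prerequisites for the setup steps are present."""
    key = f"python>={min_python[0]}.{min_python[1]}"
    report = {key: sys.version_info[:2] >= tuple(min_python)}
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        report[tool] = shutil.which(tool) is not None
    return report


if __name__ == "__main__":
    for check, ok in preflight().items():
        print(f"{'ok     ' if ok else 'missing'}  {check}")
```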

Conclusion

Tencent’s Hunyuan3D-1.0 represents more than just a new modeling tool; it is a paradigm shift. By automating, accelerating, and refining the 3D creation process, it brings powerful AI capabilities to creators across industries. Its blend of diffusion modeling, adaptive guidance, and super-resolution delivers accuracy and realism at high speed. As digital experiences grow more immersive, from the metaverse to AR shopping to virtual healthcare, 3D content is becoming the language of the future.